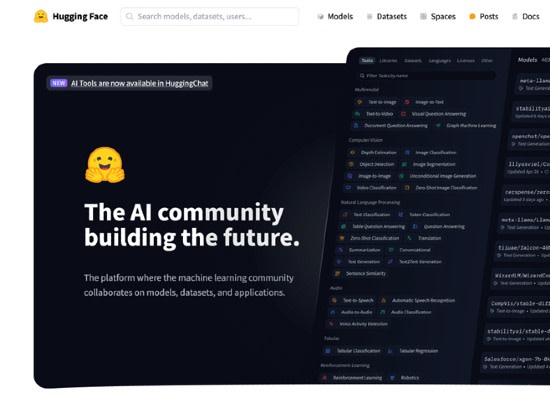

Hugging Face might have started as a playful emoji, but today it represents something much more significant in the world of machine learning. It’s one of the fastest-growing platforms and communities for AI builders, offering pre-trained models, datasets, demo apps, and an active community that thrives on open collaboration.

In this introduction to Hugging Face, we’ll take a look at the platform – what it is, why it’s become so popular in the machine learning community, and how you can quickly start using it for your own projects.

What is Hugging Face? 🤗

Hugging Face is both a platform and a community for AI builders. The two go hand in hand: the platform offers an easy way to access and share open-source machine learning models, while the community contributes to and actively engages with the platform, making it a dynamic and constantly evolving ecosystem.

“For researchers, Hugging Face is the place to publish models and collaborate with the community. For data scientists, it’s where you can explore over 300k off-the-shelf models for any machine learning task and create your own. For software developers, it’s where you can turn data and models into applications and features.”

– Emily Witko, Head of Diversity, Equity, Belonging & Employee Experience at Hugging Face

Guided by a mission to democratize machine learning, Hugging Face lowers the entry barriers for novices while providing valuable tools for experienced individuals.

A Tour of the Hugging Face Platform

The Hugging Face platform, also known as the Hugging Face Hub, is an online space where you’ll find over 800k models, 190k datasets, and 55k demo apps (Spaces). All these resources are open-source and publicly accessible, making the Hub a central place for anyone interested in exploring, experimenting, collaborating, and building with machine learning technology.

Hugging Face landing page – from the navigation bar you can access Models, Datasets and Spaces

Models 🤖

As of August 2024, there are 818,208 models available for public use on the Hugging Face platform – a staggering number, but one that’s easy to navigate thanks to robust filtering and sorting options. When searching for a model, I recommend you filter by "Tasks" and sort by metrics like "Most Downloads" or "Trending" to discover either tried-and-true models or the hottest new releases.
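The same filter-and-sort search can also be scripted. Below is a minimal sketch that uses only the Python standard library and the Hub's public REST endpoint. The query parameter names (`pipeline_tag`, `sort`, `direction`, `limit`) reflect my understanding of that API and should be treated as assumptions; the helper names are hypothetical.

```python
import json
from urllib.parse import urlencode
from urllib.request import urlopen

# Public endpoint that lists models on the Hub.
HUB_MODELS_API = "https://huggingface.co/api/models"


def build_model_search_url(task, sort="downloads", limit=5):
    """Build a search URL that filters by task and sorts in descending order."""
    params = {
        "pipeline_tag": task,  # assumed name of the task filter
        "sort": sort,          # e.g. "downloads" or "likes"
        "direction": -1,       # -1 means descending (highest first)
        "limit": limit,
    }
    return f"{HUB_MODELS_API}?{urlencode(params)}"


def fetch_top_models(task, sort="downloads", limit=5):
    """Query the Hub (needs network access) and return the matching model ids."""
    with urlopen(build_model_search_url(task, sort, limit), timeout=10) as response:
        models = json.load(response)
    return [model["id"] for model in models]


print(build_model_search_url("summarization"))
```

Calling `fetch_top_models("summarization")` would then return the ids of the most downloaded summarization models, which is roughly what the "Tasks" filter combined with "Most Downloads" shows on the website.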

Models webpage

Pro tips for NLP practitioners

When working on Natural Language Processing (NLP) tasks such as summarization, I’ve found that the most effective models aren’t always categorized under the specific task name (e.g., "Summarization"). Instead, broader categories like "Text Generation" often house more powerful models. This is particularly true because companies like Google, Meta, Mistral and Microsoft frequently publish their latest, cutting-edge models in these broader categories. This highlights the importance of exploring different filter terms and categories to find the best model for your specific needs.

As you become more familiar with Hugging Face, you’ll start to recognize contributors – whether they are individuals, groups, or corporations – who consistently publish high-quality models. In my case, I often turn to models by top contributors like TheBloke, MaziyarPanahi and bartowski, as their work aligns well with my NLP projects.

For those looking to optimize speed and memory use, consider exploring "GGUF" models under the "Libraries" filter. GGUF is the file format used by llama.cpp, and models published in it are usually quantized, meaning the numerical precision of their weights has been reduced (e.g., from 16-bit floats to 8-bit or 4-bit integers). This makes the models smaller and faster at inference time, at the cost of a slight loss in quality that may be negligible depending on the application.
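To make the size-versus-quality trade-off concrete, here is a minimal pure-Python sketch of one simple form of quantization: symmetric 8-bit quantization with a single shared scale. It is an illustration only and not how GGUF files actually store weights; the function names and the sample weights are made up for the example.

```python
import struct


def quantize_int8(weights):
    """Map floats to integers in [-127, 127] using one shared scale factor."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    return [round(w / scale) for w in weights], scale


def dequantize(quantized, scale):
    """Recover approximate float values from the quantized integers."""
    return [q * scale for q in quantized]


weights = [0.12, -0.5, 0.33, 0.9, -0.07]  # made-up example weights
quantized, scale = quantize_int8(weights)
restored = dequantize(quantized, scale)

# Storage cost: 4 bytes per float32 weight versus 1 byte per int8 weight.
float32_bytes = len(struct.pack(f"{len(weights)}f", *weights))
int8_bytes = len(struct.pack(f"{len(quantized)}b", *quantized))

# The price of the smaller size is a small rounding error per weight.
max_error = max(abs(w - r) for w, r in zip(weights, restored))

print(f"float32: {float32_bytes} bytes, int8: {int8_bytes} bytes")
print(f"largest rounding error: {max_error:.5f}")
```

The quantized copy takes a quarter of the space, and each restored weight differs from the original by at most half the scale factor. That rounding error is the "slight trade-off in quality" in miniature.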

Datasets 📊

Hugging Face hosts over 190,000 datasets, offering ample data for training and testing models. To find a suitable dataset, I suggest you filter by modality, size, format, and task. While I haven’t used Hugging Face datasets extensively – opting instead for datasets found through academic papers – those available on the platform are often highly curated and useful for a variety of projects.

Datasets webpage

Spaces 🚀

Spaces is where Hugging Face contributors publish apps and APIs that let the public use available models for specific tasks. Handily, Hugging Face hosts the apps and models in the cloud, so users don’t need to download a model to test it, nor are they restricted by their local GPU resources. Spaces is particularly useful for demos, less so for production-ready apps.

Spaces webpage

Example: "AI comic factory" for creating comic pages from text prompts

Example: "whisper" for audio transcription

Repositories 🗂️

Each model, dataset, or space on Hugging Face is linked to a Git-based repository created by the contributor. These repositories are why Hugging Face is often referred to as the "GitHub of Machine Learning". Creating a repository is necessary for contributors but not for users who simply want to explore and use the available resources.
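Because these are ordinary Git repositories, they can be cloned like any other. The sketch below shows how the repository URL depends on the resource type: models sit at the root of the site, while datasets and Spaces get a prefix. The helper names are hypothetical, and `your-username/your-dataset` is a placeholder rather than a real repository.

```python
HUB_URL = "https://huggingface.co"

# Models live at the root; datasets and Spaces are namespaced by a prefix.
REPO_TYPE_PREFIXES = {"model": "", "dataset": "datasets/", "space": "spaces/"}


def repo_url(repo_id, repo_type="model"):
    """Return the web (and Git remote) URL of a Hub repository."""
    if repo_type not in REPO_TYPE_PREFIXES:
        raise ValueError(f"unknown repo type: {repo_type!r}")
    return f"{HUB_URL}/{REPO_TYPE_PREFIXES[repo_type]}{repo_id}"


def git_clone_command(repo_id, repo_type="model"):
    """Return the git command that would clone the repository."""
    return ["git", "clone", repo_url(repo_id, repo_type)]


print(" ".join(git_clone_command("openai/whisper-large-v3")))
print(" ".join(git_clone_command("your-username/your-dataset", "dataset")))
```

Large files such as model weights are typically stored with Git LFS, so an actual clone usually needs `git-lfs` installed as well.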

Why Hugging Face Has Won So Many Hearts ❤️

One of the main reasons Hugging Face is so beloved by the machine learning community is its user-friendly design. The platform’s open-source libraries abstract much of the complexity involved in working with deep learning models, making the technology accessible even to those without extensive experience.

The Transformers library is particularly noteworthy: with over 130k stars and 25.9k forks on GitHub, it’s the most widely adopted library for NLP. It includes a wide range of functionalities, such as loading models, tokenization, training, and inference, facilitating experimentation with different models and architectures.
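To give a feel for how little code this takes, here is a minimal sketch of tokenization and inference with Transformers. It assumes the `transformers` package and a backend such as PyTorch are installed, and it uses `openai-community/gpt2` purely as a small example model; the two wrapper functions are my own, not part of the library.

```python
def count_tokens(text, model_id="openai-community/gpt2"):
    """Tokenize text with the model's own tokenizer and count the tokens."""
    # Imported inside the function so that defining it does not require
    # transformers to be installed.
    from transformers import AutoTokenizer

    tokenizer = AutoTokenizer.from_pretrained(model_id)  # downloads on first use
    return len(tokenizer(text)["input_ids"])


def generate(prompt, model_id="openai-community/gpt2", max_new_tokens=30):
    """Load a text-generation pipeline and return the generated text."""
    from transformers import pipeline

    generator = pipeline("text-generation", model=model_id)
    outputs = generator(prompt, max_new_tokens=max_new_tokens)
    return outputs[0]["generated_text"]
```

Calling `generate("Hugging Face is")` downloads the model on first use and returns the prompt followed by the model's continuation. Swapping in a different model is a matter of changing `model_id`, which is what makes experimenting with different architectures so quick.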

Conclusion: Machine Learning for All

Hugging Face has rapidly grown into a leading platform and vibrant community for machine learning, driven by values of simplicity, diversity, and decentralization. Whether you’re a researcher, data scientist, or software developer – regardless of your experience level – Hugging Face provides an extensive array of tools to advance your AI projects. And by lowering barriers and fostering collaboration, Hugging Face is working to make machine learning, a powerful technology, accessible to everyone.